Artificial Intelligence (AI) is rapidly emerging as a transformative force within healthcare. The potential benefits are significant, promising improvements in diagnostic accuracy, personalized treatment plans, administrative efficiency, and patient outcomes. Governments and healthcare organizations recognize AI’s capacity to reshape operations, making them safer and more reliable. The excitement spans everything from faster drug development to cost savings.

However, this wave of enthusiasm often obscures the obstacles to widespread, effective, and ethical AI implementation. Like the early days of electronic health records (EHRs), AI hype can lead to a sensationalized view, marginalizing the real technological, operational, safety, and ethical concerns. Harnessing AI’s power requires a clear-eyed assessment of its limitations and risks. The challenges of AI in healthcare are multifaceted and demand careful consideration.

This blog post dives into these critical hurdles. We will explore the complex ethical and privacy risks of using AI with sensitive patient data. We will also examine the technical barriers related to data quality, security, and system integration. Furthermore, we will analyze concerns surrounding the reliability and validation of AI algorithms, the difficult questions of liability when errors occur, and the barriers hindering broader adoption in clinical settings. Understanding AI challenges in healthcare is the first step toward navigating them successfully.

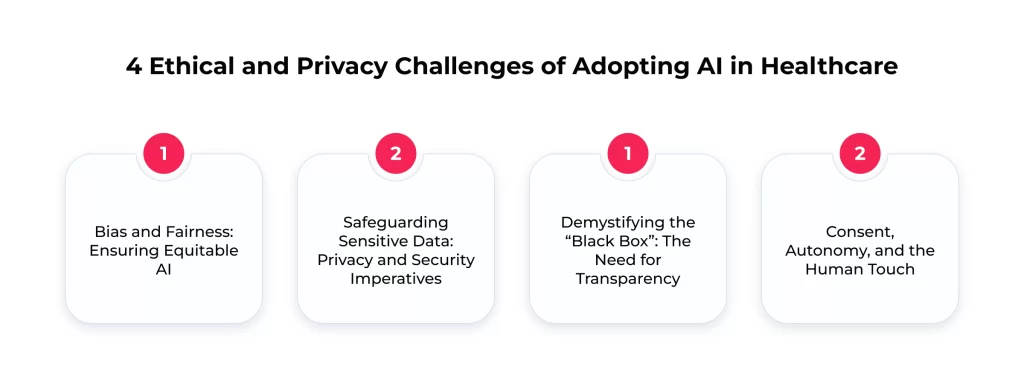

AI in Healthcare Ethical and Privacy Challenges

As AI becomes increasingly woven into healthcare delivery, establishing strong ethical governance is not merely advisable but an absolute necessity. AI systems are being applied in highly sensitive areas, including direct patient care and the handling of confidential health information. This integration demands a proactive approach to the ethical and privacy challenges inherent to AI in healthcare.

International bodies like the World Health Organization (WHO) have recognized this urgency, issuing guidance to navigate the ethical complexities, particularly concerning newer GenAI models. Ensuring that AI serves the public benefit requires confronting bias, privacy, transparency, and consent head-on.

Bias and Fairness: Ensuring Equitable AI

One of the major ethical red flags associated with AI in healthcare is the potential for bias. AI systems learn from data, so if the information used for training reflects existing societal biases or is not representative of the diverse patient population, AI can perpetuate or amplify those inequities. Bias can creep in through various means:

- The training data might over- or underrepresent certain demographic groups

- Historical inequities embedded in medical records can be mirrored in algorithms

- The rules used to construct the algorithms might be inherently biased

Even variations between the controlled training environment and the complexities of real-world applications can introduce bias. The consequences of such bias are severe. It can often lead to unequal treatment, misdiagnosis, or underdiagnosis for specific populations, widening existing health disparities rather than closing them. That represents a failure of ethical responsibility and critically undermines patient trust.

When patients, particularly those from marginalized communities, perceive AI systems as unfair or biased against them, their confidence in the technology erodes. This erosion of trust is a key barrier, especially given that studies already indicate significant patient skepticism, with reports suggesting over 60% of patients lack trust in healthcare AI. The connection is direct: biased AI leads to unfair outcomes, which fuels patient mistrust, hindering adoption. So, addressing algorithmic bias through strategies like inclusive data collection and rigorous bias mitigation techniques is fundamental for fairness and building the necessary trust.
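One common way to make bias measurable is to compare an error rate across demographic groups. The sketch below is a minimal illustration, not a complete fairness audit: the group labels, the records, and the choice of false negative rate as the metric are all assumptions made for the example.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """Return {group: FNR}, where FNR = missed positives / actual positives."""
    positives = defaultdict(int)
    misses = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 0:
                misses[group] += 1
    return {g: misses[g] / positives[g] for g in positives}

# Hypothetical (group, actual, predicted) triples, for illustration only.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]

rates = false_negative_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)  # {'A': 0.25, 'B': 0.75} 0.5
```

A large gap like this one would mean the model misses far more true cases in group B, which is exactly the kind of underdiagnosis described above.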

Safeguarding Sensitive Data: Privacy and Security Imperatives

AI’s reliance on vast quantities of data presents immense privacy and security challenges in healthcare. Healthcare organizations are custodians of sensitive patient information, making them attractive cyberattack targets. Integrating AI systems, which process and analyze this data, introduces new vulnerabilities and expands the potential attack surface. Key privacy risks include unauthorized access through data breaches or cyberattacks targeting AI systems and the potential misuse of data. Misuse may occur when sensitive information is transferred between institutions or to third-party AI developers without sufficient oversight.

While the increasing use of cloud storage for healthcare data offers benefits, it also heightens security risks if not managed meticulously. Patients are acutely aware of these risks, with a large portion expressing concern that AI could compromise the security of their health records.

Adherence to stringent data protection regulations, such as the US Health Insurance Portability and Accountability Act (HIPAA), is non-negotiable. Navigating these regulations while enabling the data flows necessary for AI can be complex. The WHO reinforces the importance of privacy, framing it as a basic human right that must be protected within AI governance frameworks.

Protecting patient data in the age of AI requires a multi-layered approach. Key strategies include:

- Implementing robust end-to-end encryption for data in transit and at rest

- Establishing strict access controls and authentication mechanisms to limit data exposure

- Conducting regular security audits and vulnerability assessments

- Providing comprehensive training for staff on privacy protocols and security best practices

Exploring and implementing privacy-preserving AI techniques, which allow analysis without exposing raw, sensitive data, is also becoming increasingly important. That highlights a core tension: AI thrives on data, yet the ethical and legal mandate is to protect that data rigorously.
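Differential privacy is one such privacy-preserving technique. As a rough sketch, the Laplace mechanism below answers a counting query with calibrated noise so that no single patient’s presence can be inferred from the result. The function name `dp_count`, the sample ages, and the chosen `epsilon` are assumptions for illustration; a production system would rely on a vetted library rather than this hand-rolled version.

```python
import random

def dp_count(values, predicate, epsilon, rng):
    """Differentially private count via the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

# Hypothetical patient ages; the exact count of patients aged 65+ is 5.
ages = [34, 71, 68, 45, 80, 59, 66, 72]
rng = random.Random(0)
noisy = dp_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` adds more noise and gives stronger privacy, at the cost of less accurate answers, which mirrors the tension between data utility and data protection.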

Demystifying the “Black Box”: The Need for Transparency

Many advanced AI systems, particularly those based on complex machine learning or deep learning models, operate as “black boxes.” Their internal decision-making processes are opaque, making it difficult or impossible to determine precisely how they arrive at a specific output or recommendation. This lack of transparency is a distinct challenge for AI in healthcare.

When clinicians cannot understand the reasoning behind an AI suggestion, it understandably breeds mistrust and hesitation to rely on the technology, especially for critical decisions. Patients, too, may be wary of treatments or diagnoses generated by systems whose logic is hidden. Furthermore, the opacity makes identifying potential errors, biases, or flaws within algorithms challenging and complicates efforts to establish accountability when things go wrong.

The WHO’s ethical guidelines explicitly call for transparency and intelligibility in AI systems used in healthcare. The lack of transparency is consistently cited as a significant barrier to building the trust necessary for widespread AI adoption. Consequently, there is a growing demand for explainable AI (XAI): methods and techniques designed to make AI decisions more understandable to humans. Achieving greater transparency and proper communication about what AI systems can and cannot do reliably is essential for fostering confidence and ensuring responsible use.
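Permutation importance is one simple, model-agnostic XAI technique: shuffle one input at a time and see how much accuracy drops. The sketch below assumes a toy stand-in for an opaque model and made-up rows (a blood-pressure-like value and an unrelated flag), purely to show the mechanics.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, rng, repeats=20):
    """Mean drop in accuracy when one feature column is shuffled."""
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical stand-in for an opaque model: it only looks at feature 0.
def model(row):
    return 1 if row[0] > 140 else 0

rows = [(120, 1), (150, 0), (135, 1), (160, 1),
        (110, 0), (145, 0), (155, 1), (125, 0)]
labels = [model(r) for r in rows]
importances = permutation_importance(model, rows, labels, 2, random.Random(0))
print(importances)
```

Here the second feature gets an importance of zero because the model never uses it, while the first shows a clear drop. That kind of signal can help clinicians see what a recommendation actually depends on.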

Consent, Autonomy, and the Human Touch

The ethical landscape of AI in healthcare also involves fundamental principles of patient rights and the nature of care itself. Obtaining truly informed consent presents unique challenges when AI is involved. Patients have the right to autonomy – to make informed decisions about their care. For consent to be meaningful, patients must understand that AI might be used, how their health data will be collected, stored, and utilized by these systems, and what role AI plays in the diagnostic or treatment process. Communicating the complexities of AI in an accessible way to ensure genuine understanding is a significant hurdle. The WHO guidance strongly emphasizes the need to uphold patient dignity and autonomy.

Beyond consent, there are valid concerns about AI’s potential impact on the essential human element of healthcare. An overreliance on technology could lead to a depersonalization of care, diminishing the empathy, communication, and trust that form the bedrock of the patient-provider relationship. Surveys reflect this anxiety, with a majority of US adults (57%) fearing that AI will negatively affect their relationship with their healthcare providers. Preserving the human connection while leveraging AI’s capabilities is a delicate balancing act.

Additionally, the question of data ownership – who ultimately owns and controls the vast datasets used to train and operate healthcare AI – remains an unresolved ethical issue with competing interests among providers, developers, and data aggregators.

Technical Roadblocks as AI Challenges in Healthcare Adoption

Beyond the critical ethical considerations, the practical implementation of AI in healthcare faces substantial technical hurdles. These challenges relate to the data itself, the ability of AI systems to integrate with existing infrastructure, and the complexities of ensuring they function reliably in diverse clinical environments. Overcoming such technical roadblocks is essential for translating AI’s potential into tangible clinical benefits.

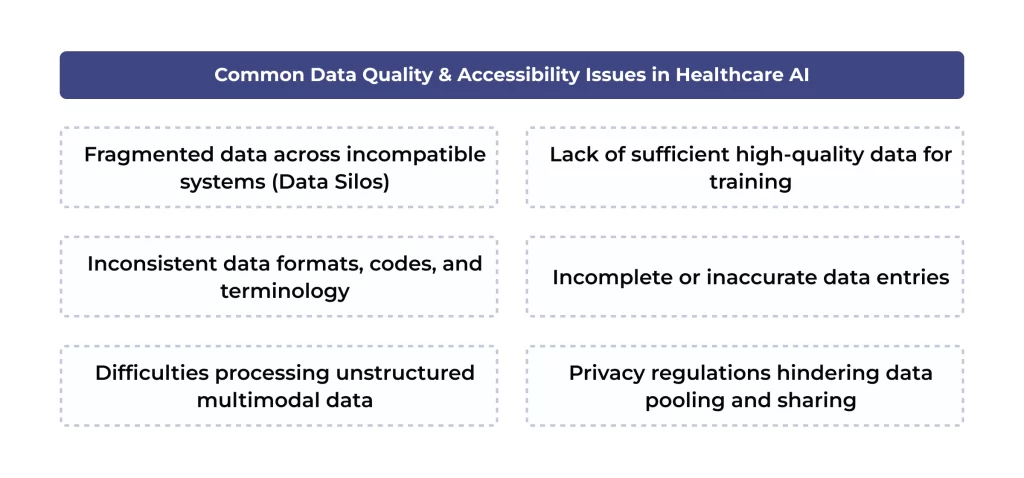

The Data Dilemma

Data is the lifeblood of AI, but healthcare information presents a unique set of challenges. AI algorithms, particularly ML models, require vast amounts of high-quality data to train effectively and make accurate predictions. However, healthcare data is frequently plagued by issues that hinder AI development and deployment. It is often fragmented, residing in isolated systems across departments or institutions, making it difficult to get a complete picture of a patient’s history. Data quality can be poor, containing inaccuracies, inconsistencies, or missing details.

The lack of standardization is a significant problem. Different systems often use proprietary formats, inconsistent codes, varying terminology, and even free text instead of structured fields, making data exchange and aggregation extremely difficult.

Besides, much valuable clinical information exists in unstructured formats, such as physicians’ notes, medical images (X-rays, MRIs), audio recordings, and sensor readings. Processing and extracting meaningful insights from this multimodal, unstructured information is a tremendous challenge for traditional analytics and many AI algorithms. However, newer AI techniques like natural language processing show promise. Accessing and pooling data across organizations is further complicated by strict privacy regulations and the general incompatibility of EHR systems.

Addressing this data dilemma requires concerted effort. Strategies include:

- Implementing robust data cleaning and normalization processes

- Enforcing consistent data entry protocols

- Adopting standardized terminologies (like SNOMED CT or LOINC)

- Fostering collaboration between institutions for responsible data sharing
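The normalization and standard-terminology steps above can be sketched as a small mapping function. The local codes (`GLU`, `HGB`) are hypothetical, and the LOINC codes shown are illustrative and should be checked against the official LOINC release before any real use.

```python
# Illustrative mapping from hypothetical local lab codes to LOINC codes.
LOCAL_TO_LOINC = {
    "GLU": "2345-7",  # Glucose [Mass/volume] in Serum or Plasma
    "HGB": "718-7",   # Hemoglobin [Mass/volume] in Blood
}
# Approximate factor derived from glucose's molar mass (~180.16 g/mol).
MMOL_L_TO_MG_DL_GLUCOSE = 18.016

def normalize_lab(record):
    """Map a raw lab record to a standard code and a consistent unit."""
    code = record["code"].strip().upper()
    unit = record["unit"].strip()
    value = float(record["value"])
    loinc = LOCAL_TO_LOINC.get(code)
    if loinc is None:
        raise ValueError(f"unmapped local code: {code!r}")
    if loinc == "2345-7" and unit.lower() == "mmol/l":
        value = round(value * MMOL_L_TO_MG_DL_GLUCOSE, 1)
        unit = "mg/dL"
    return {"loinc": loinc, "value": value, "unit": unit}

raw = {"code": " glu ", "value": "5.5", "unit": "mmol/L"}
print(normalize_lab(raw))
```

Raising on unmapped codes, rather than passing them through, is a deliberate choice in this sketch: silent pass-through is one way inconsistent data ends up in training sets.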

Investing in wearables and remote monitoring techs can also help generate high-quality data streams. The fragmented nature of healthcare data is a fundamental barrier limiting AI’s potential. Because data is often siloed in incompatible legacy systems, assembling the large, diverse datasets needed to train robust AI models is difficult. This reliance on limited or non-representative data is a direct cause of algorithmic bias and makes it incredibly hard to validate AI performance across different patient populations. Thus, tackling data fragmentation through standardization and interoperability is a main requirement for building trustworthy AI.

Integration & Interoperability

A major technical AI challenge in healthcare is integrating new applications with the complex web of healthcare IT infrastructure. Many healthcare organizations rely on legacy systems, some decades old, which often use outdated technology and proprietary data formats. These systems were typically not designed to communicate with each other, let alone with modern AI tools, creating significant integration hurdles.

The lack of interoperability (the ability of systems and software applications to communicate, exchange data, and use the information exchanged) is a persistent barrier. Even within a single hospital, departments might use systems that cannot easily share information, leading to data silos. This lack of seamless data flow hinders efficiency, prevents clinicians from having a complete view of patient information, and can force cumbersome workarounds. For example, clinicians might have to manually input or print out AI results if an AI tool cannot directly receive data from or send results to the main EHR system.

Addressing interoperability requires strategic investments and a commitment to standards. Adopting standardized data formats and communication protocols, most notably HL7 FHIR, is crucial for enabling different systems to talk to each other. Utilizing Application Programming Interfaces (APIs) can create bridges between legacy systems and newer AI platforms without requiring complete system overhauls. An API-first architecture allows for more flexible and scalable integration. Cloud computing also offers solutions by providing platforms with built-in interoperability features for easier data sharing and real-time access.
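To make the FHIR point concrete, here is a minimal sketch of wrapping an AI-relevant lab value as a FHIR Observation resource in JSON. The helper name `to_fhir_observation` and the patient ID are hypothetical, and the resource carries only a handful of fields; a real integration would follow the full FHIR specification and the receiving EHR’s profile requirements.

```python
import json

def to_fhir_observation(patient_id, loinc_code, display, value, unit):
    """Build a minimal FHIR Observation as a plain dict."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {
            "coding": [
                {"system": "http://loinc.org", "code": loinc_code, "display": display}
            ]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "valueQuantity": {
            "value": value,
            "unit": unit,
            "system": "http://unitsofmeasure.org",  # UCUM units
            "code": unit,
        },
    }

# Hypothetical patient ID and value, for illustration only.
obs = to_fhir_observation("example-123", "2345-7", "Glucose", 99.1, "mg/dL")
print(json.dumps(obs, indent=2))
```

Because the payload is plain JSON with standard code systems, any FHIR-capable system can parse it without knowing anything about the tool that produced it.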

Furthermore, government initiatives, like the 21st Century Cures Act in the US, which mandates improved data sharing and prohibits information blocking, promote greater interoperability. Ultimately, collaboration between technology vendors and healthcare organizations is vital to creating interoperable solutions.

The Trust Factor: Reliability, Validation, and Accuracy Challenges

Even if AI systems are ethically designed and technically integrated, their adoption hinges on a fundamental element: trust. Clinicians and patients must be confident that the tools are reliable, accurate, and safe, especially for critical medical decisions. However, ensuring this reliability presents issues. Concerns about the accuracy of AI predictions, the potential for performance to degrade over time, and the rigor of validation processes are central to building this trust.

How Reliable Are AI Predictions in Real-World Care?

A primary concern with AI in healthcare is the reliability and accuracy of its outputs. While AI holds promise for enhancing diagnostic accuracy, errors can and do occur. Inaccurate AI-generated predictions or recommendations can lead to misdiagnoses, inappropriate treatments, or delays in care, potentially causing patient harm. The risk of inaccuracy is cited as the most significant concern regarding generative AI technologies.

A particularly insidious challenge is the potential for AI model performance to degrade over time. Models are typically trained on data from a specific point in time. Still, the clinical environment is constantly changing – patient populations shift, diseases evolve, clinical guidelines are updated, and even IT infrastructure or data acquisition methods change.

This “data drift” can cause a model’s accuracy to decline silently after deployment. Worryingly, there is often no systematic monitoring to detect this degradation, so it may be noticed only after errors have already affected patient care, and even then detection may depend on individual clinicians’ intuition, which varies. This hidden danger underscores that initial validation alone is insufficient; ongoing performance monitoring is crucial for safety.
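One widely used way to quantify drift in an input feature is the Population Stability Index (PSI), which compares the distribution seen at training time with the one seen in production. The sketch below uses hypothetical patient ages and bin edges, and the 0.25 alert level is a common rule of thumb rather than a clinical standard.

```python
import math

def psi(expected, actual, edges):
    """Population Stability Index between two samples over shared bins."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value meets or exceeds.
            counts[sum(v >= e for e in edges)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical ages at training time vs. in current use.
train_ages = [30, 40, 50, 60, 70, 35, 45, 55, 65, 75]
live_ages = [62, 70, 75, 80, 66, 58, 72, 68, 77, 81]
edges = [40, 60]  # assumed bins: under 40, 40 to 59, 60 and over

score = psi(train_ages, live_ages, edges)
print(score > 0.25)  # True: the live population has shifted markedly older
```

A check like this runs on inputs alone, so it can flag a shift before labeled outcomes are available to measure accuracy directly.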

Another related risk is “automation bias”. This cognitive bias occurs when humans, in this case clinicians, place excessive trust in the output of automated systems like AI. Over-reliance can lead clinicians to suspend their critical judgment, potentially overlooking errors made by the AI or failing to consider alternative diagnoses or treatments. That highlights the need for AI to augment, not replace, human expertise and judgment. These reliability concerns directly feed into patient skepticism. Many patients remain uncomfortable with AI making critical decisions, and a significant portion fear AI could lead to worse health outcomes.

Validating AI: Ensuring Safety and Efficacy

Rigorous validation is crucial to ensure healthcare AI algorithms are safe, effective, and perform as expected in real-world settings. Validation involves assessing an algorithm’s performance, typically on a dataset separate from the one used for initial training. However, the validation process itself faces numerous challenges.

One major issue is the difficulty hospitals face in independently validating AI tools. Accessing sufficient quantities of high-quality, labeled patient data representative of the specific population can be a significant hurdle due to data silos, privacy constraints, and resource limitations.

Besides, the data used for validation by vendors or researchers can be biased. If an algorithm is validated using a homogeneous data sample that does not reflect the diversity of the patient population where it will be deployed, the validation results can be misleading and can mask poor performance in certain demographic groups. The FDA is increasingly emphasizing the need for diverse datasets in validation, moving away from allowing validation solely on training data.
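A small worked example shows why subgroup size matters in validation. The sketch below computes a Wilson score interval (95% with `z=1.96`) for sensitivity in two groups; the counts are invented for illustration and do not come from any real study.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score confidence interval for a proportion."""
    if n == 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

# Hypothetical validation counts: true positives detected / actual positives.
majority = wilson_interval(450, 500)  # observed sensitivity 0.90
minority = wilson_interval(14, 20)    # observed sensitivity 0.70

print([round(x, 3) for x in majority])
print([round(x, 3) for x in minority])
```

With only 20 positive cases, the minority-group interval is far wider, so a headline accuracy figure dominated by the majority group says little about how the tool performs for underrepresented patients.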

A critical gap exists between regulatory authorization and demonstrated clinical effectiveness. Research has shown that a substantial percentage (around 43%) of AI medical devices authorized by the FDA lacked publicly available clinical validation data at the time of analysis.

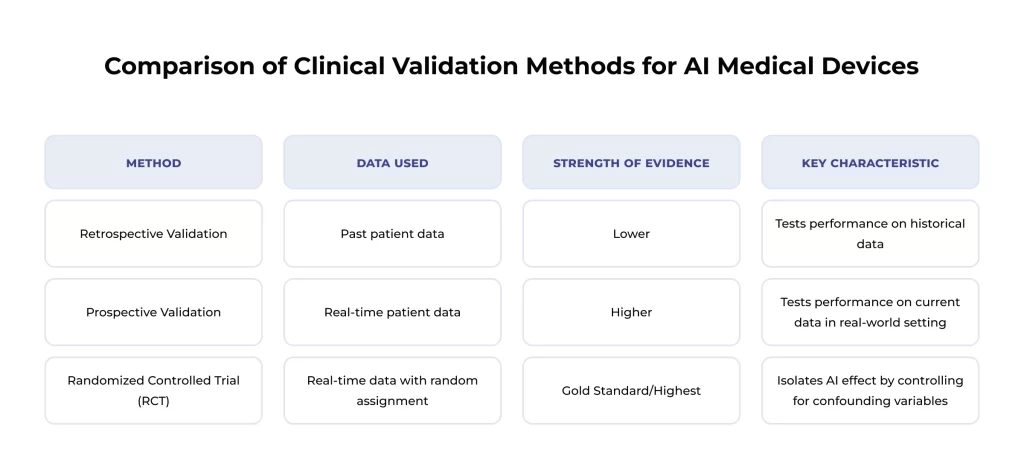

That highlights a lack of transparency. It is also crucial to understand the type of validation performed. Retrospective validation uses previously collected data, while prospective validation evaluates the tool on new cases as they arrive and generally provides stronger evidence. Randomized controlled trials (RCTs), a subset of prospective studies, are considered the gold standard because they rigorously control for variables to isolate the AI’s effect. The lack of clarity and public availability regarding these validation specifics undermines the credibility of some AI tools.

Given the dynamic nature of healthcare and the risk of model degradation, constant monitoring and re-validation after deployment are essential. Initiatives like the PRECISE-AI program aim to develop automated tools to continuously monitor AI performance, detect degradation, and even correct it without constant human oversight. Ensuring robust, transparent, and ongoing validation processes is key to assuring the safety and efficacy of AI in clinical practice.

Barriers Impeding AI Adoption in Healthcare

Even when ethical guidelines are considered, technical integration is feasible, and reliability is addressed, the widespread adoption of AI in healthcare faces tremendous inertia. Several practical barriers related to cost, regulation, standardization, and the human element slow down the integration of these powerful techs into routine clinical practice. Overcoming these adoption hurdles is vital for realizing AI’s benefits and remains an ongoing challenge.

The Economics of AI: Costs and Investments

Implementing AI in healthcare requires substantial financial investment. The costs associated with developing or acquiring AI software, integrating it with existing systems, training staff, and ongoing maintenance can be significant. Such high costs represent a major barrier, particularly for smaller clinics or hospitals operating on tight budgets.

However, these upfront investments must be weighed against the potential for considerable long-term cost savings and return on investment (ROI). Studies estimate widespread AI adoption could save the US healthcare system between $200 billion and $360 billion annually within five years. These savings are projected to come from various sources:

- Increased operational efficiency (e.g., optimizing operating room schedules)

- Reduction of administrative burdens (e.g., automating documentation)

- Optimization of supply chains

- Improved patient outcomes leading to lower downstream costs

Gartner predicts, for example, that AI can reduce clinicians’ time spent on documentation by 50% by 2027. The rapidly growing global market for AI in healthcare is projected to soar past $600 billion by 2034, indicating strong belief in the tech’s value proposition despite the adoption costs. Showing clear ROI, starting with lower-cost “quick wins,” is key for justifying investment.

Regulatory Hurdles and Standardization Gaps

A complex and evolving regulatory landscape further complicates the path to AI adoption. Healthcare organizations must:

- Navigate existing regulations like HIPAA

- Secure approvals from bodies like the FDA for AI-driven medical devices

- Anticipate potential new AI-specific legislation at state, national, or international levels

This regulatory complexity can be daunting, requiring resources and expertise to ensure compliance. The WHO has called for strong government leadership to establish clear regulatory frameworks to guide responsible AI development and use.

Compounding the regulatory challenge is the persistent lack of universal standards, particularly concerning data formats and interoperability. The absence of widely adopted standards makes it difficult to share data securely, integrate AI tools seamlessly with existing systems, and ensure that different techs can work together effectively. This lack of standardization acts as a significant drag on adoption, increasing complexity and cost.

The Human Factor: Workforce Readiness and User Trust

The success of AI in healthcare also depends on people – both the professionals who use the technology and the patients who are affected by it. Some human factors currently act as critical barriers to adoption.

A major issue is the workforce skills gap. Many healthcare professionals lack adequate knowledge and understanding of AI techs, including their capabilities, limitations, and potential pitfalls. There is a pressing need for comprehensive training and education that goes beyond basic technical operation to include critical appraisal skills: knowing when to trust AI, when to question it, and how to interpret its outputs correctly. Concurrently, a documented shortage of IT staff with specialized AI and ML skills makes it difficult for medical organizations to build or manage relevant systems effectively.

Resistance to change and skepticism among potential users also impede adoption. Clinicians may resist AI due to:

- Concerns about workflow disruption

- Lack of trust in the tech’s reliability or fairness

- Fear of deskilling or role replacement

- The perceived added burden of using new tools

Finally, the practical integration of AI into established clinical workflows is a critical challenge. If an AI tool is cumbersome, requires changes to existing processes, or does not seamlessly fit into how clinicians work, it is unlikely to be adopted, even if technically sound. Poor integration can lead to frustration, inefficiency, and, ultimately, technology abandonment.

Conversely, AI tools that demonstrably streamline workflows, reduce administrative tasks, and enhance clinician capabilities are far more likely to gain acceptance. That highlights how crucial user experience and thoughtful workflow integration are. After all, how the AI fits into the human process directly impacts trust and adoption. Successfully navigating the human factors requires a user-centric approach to AI adoption, one that focuses on change management, training, and workflow design alongside the technology itself.

Final Thoughts

AI holds immense promise for reshaping healthcare, offering pathways to improved diagnostics, more personalized treatments, enhanced operational efficiency, and better patient outcomes. However, as this article has detailed, the journey towards realizing this potential is fraught with significant and interconnected challenges of AI in healthcare. From navigating the complex ethical tightrope to overcoming formidable technical hurdles, the obstacles are substantial.

Building trust in AI systems requires addressing concerns about reliability, ensuring rigorous and continuous validation, and establishing clear lines of accountability when errors inevitably occur. The current ambiguity surrounding liability is a brake on both innovation and adoption. Finally, practical barriers related to cost, regulatory complexity, staff readiness, and user acceptance must be systematically dismantled to facilitate broader implementation. These AI challenges in healthcare cannot be ignored or underestimated.

Integrating AI into the healthcare ecosystem requires a concerted, collaborative, and responsible approach, with active engagement among tech developers, medical providers, regulatory bodies, policymakers, ethicists, and patients. Robust governance frameworks, ethical-by-design principles, and a commitment to transparency are essential prerequisites.

While the issues of AI in healthcare are undeniable, they are not insurmountable. The medical community can navigate the complexities by acknowledging these hurdles openly, investing in solutions, fostering collaboration, and prioritizing patient safety and equity. Addressing them proactively and thoughtfully will be key to leveraging AI’s transformative power.

FAQ

Can we trust AI with life-and-death decisions?

Trusting AI with critical decisions is complex and requires caution. Current challenges of AI in healthcare, such as the potential for algorithmic bias and the “black box” nature of some systems hindering transparency, contribute to justifiable skepticism. While AI can offer powerful analytical capabilities, it should currently be viewed as a tool to augment, not replace, human clinical judgment. Trust must be earned through demonstrable reliability, transparency, robust validation, ethical safeguards, and clear accountability structures. Human oversight remains essential, especially in life-and-death situations.

Who is responsible if AI makes a medical mistake?

Assigning responsibility for AI-driven medical errors is one of the most significant unresolved challenges. There is currently no simple answer, and liability is likely to be determined on a case-by-case basis. Potential responsibility could fall on:

1. The clinician who used the AI tool (for failing to exercise due diligence)

2. The AI developer (if the algorithm was flawed or inadequately tested)

3. The healthcare organization (for improper implementation or inadequate training)

The lack of specific laws and regulations governing AI liability further complicates this issue. Clear legal and regulatory frameworks are needed to establish accountability.

How is patient data protected when used by AI systems?

Protecting sensitive patient data is essential when implementing AI. Key protection methods include:

– Encryption. Using strong encryption for data both when it’s stored (at rest) and when it’s being transmitted (in transit).

– Access Controls. Implementing strict controls and authentication mechanisms ensures that only authorized personnel can access sensitive data.

– Regular Audits. Conducting frequent audits of security systems and practices to identify and address vulnerabilities.

– Compliance. Adhering to data privacy regulations like HIPAA and evolving global standards.

– Employee Training. Educating staff on data security best practices and privacy requirements.

– Privacy-Preserving Techniques. Exploring methods like federated learning or differential privacy that allow AI training without exposing raw patient data.
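To illustrate federated learning from the last item, the sketch below shows only the aggregation step of federated averaging: each institution trains locally and shares model weights plus a sample count, never raw records. The two-element weight vectors and sample counts are hypothetical, and real deployments add secure transport and often secure aggregation on top.

```python
def federated_average(client_updates):
    """Sample-weighted average of client weight vectors (FedAvg aggregation).

    client_updates: list of (weights, n_samples) pairs, one per institution.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no samples reported")
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(dim)
    ]

# Hypothetical updates from two hospitals with 100 and 300 local records.
updates = [([0.2, 1.0], 100), ([0.4, 0.0], 300)]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count means the larger hospital has proportionally more influence on the shared model, while neither site ever sees the other’s patient data.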

What are the privacy risks of using AI in hospitals or clinics?

Using AI introduces specific privacy risks due to the technology’s reliance on large datasets. Key risks include:

– Data Breaches. AI systems and their data can be targets for cyberattacks, potentially exposing vast amounts of protected health information (PHI).

– Unauthorized Access. Weak access controls or vulnerabilities could allow unauthorized individuals to view or manipulate patient data within AI systems.

– Data Misuse. Patient data collected for one purpose might be misused for another, especially if data-sharing practices between settings or vendors lack sufficient oversight.

– Cloud Security Vulnerabilities. Storing or processing healthcare data in the cloud for AI applications introduces risks if the cloud environment is not adequately secured.

– Re-identification. Even if data is “anonymized,” there might be risks of re-identifying individuals if not done correctly, especially when combining datasets.
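The re-identification risk can be checked with a k-anonymity measure: the size of the smallest group of records that share the same combination of quasi-identifiers. The field names and rows below are hypothetical, and k-anonymity is only one of several possible checks.

```python
from collections import Counter

def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values."""
    if not rows:
        raise ValueError("rows must not be empty")
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())

# Hypothetical "anonymized" records with names and IDs already removed.
rows = [
    {"zip3": "021", "age_band": "60-69", "sex": "F"},
    {"zip3": "021", "age_band": "60-69", "sex": "F"},
    {"zip3": "021", "age_band": "70-79", "sex": "M"},
    {"zip3": "100", "age_band": "60-69", "sex": "F"},
    {"zip3": "100", "age_band": "60-69", "sex": "F"},
]

print(k_anonymity(rows, ["zip3"]))                     # 2
print(k_anonymity(rows, ["zip3", "age_band", "sex"]))  # 1
```

A result of 1 means at least one person is unique on those fields, so combining the dataset with another source that carries the same fields could single that person out.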

Are AI predictions reliable enough for real-world healthcare?

The reliability of AI predictions is still a major challenge for AI in healthcare. While AI can achieve high accuracy in specific, controlled tasks, several factors affect real-world reliability:

– Accuracy Issues. AI models are not infallible and can make errors.

– Model Degradation. Performance can decline as clinical conditions change, a risk that is not always adequately monitored.

– Validation Gaps. Many AI tools lack robust, transparent clinical validation demonstrating effectiveness across diverse populations.

– Bias. Underlying biases in data or algorithms can lead to unreliable or unfair predictions for specific groups.

– Automation Bias. Over-reliance by clinicians can lead to missed errors.

AI predictions should be used cautiously, ideally as decision support tools requiring careful review and validation by human experts rather than as standalone decision-makers.

How do we ensure AI systems are continuously updated and validated?

Ensuring ongoing safety and efficacy requires moving beyond one-time validation. Key strategies include:

1. Continuous Monitoring. Implementing systems to actively monitor the performance of deployed AI models in real-time to detect any drop in accuracy or reliability.

2. Regular Re-validation. Establishing protocols for periodically re-validating AI models using current clinical data to ensure they remain accurate and relevant.

3. Model Updating. Developing mechanisms to safely and effectively update AI models when performance degrades, or changes occur in clinical practice or patient populations.

4. Automated Tools. Investing in research and development of tools, like those envisioned by the PRECISE-AI program, that can automatically detect degradation and potentially trigger corrections.

5. Transparent Reporting. Requiring vendors and institutions to report on ongoing monitoring and validation results transparently.
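The continuous monitoring step can be sketched as a rolling-window check that raises an alert when recent accuracy falls below a floor. The class name, window size, and threshold here are assumptions for illustration; real thresholds would come from clinical and statistical review.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track accuracy over the most recent cases and flag drops."""

    def __init__(self, window, threshold):
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def accuracy(self):
        if not self.outcomes:
            raise ValueError("no cases recorded yet")
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, prediction, actual):
        """Add one adjudicated case; return True if an alert should fire."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait until the window is full
        return self.accuracy() < self.threshold

# Hypothetical stream: five correct predictions, then two errors.
monitor = RollingAccuracyMonitor(window=5, threshold=0.8)
cases = [(1, 1)] * 5 + [(1, 0), (0, 1)]
alerts = [monitor.record(p, a) for p, a in cases]
print(alerts)  # [False, False, False, False, False, False, True]
```

The alert fires on the seventh case, when accuracy over the last five cases drops to 0.6. In practice, confirmed outcomes often arrive with a delay, so a monitor like this complements rather than replaces periodic re-validation.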

What are the biggest barriers to implementing AI in healthcare settings?

Several significant barriers hinder widespread AI implementation. The biggest include:

– Cost. High costs for development, integration, and maintenance.

– Data Issues. Problems with data quality, quantity, fragmentation, and accessibility.

– Interoperability. Difficulty integrating AI with existing legacy systems.

– Regulation. Navigating complex and evolving regulatory frameworks.

– Workforce Skills. Lack of AI knowledge and skills among healthcare professionals.

– Trust and Acceptance. Skepticism and resistance from clinicians and patients due to safety, privacy, bias, and reliability concerns.

– Workflow Integration. Challenges in seamlessly incorporating AI into complex clinical workflows without disruption.

– Liability Uncertainty. Lack of clarity regarding responsibility for AI errors.

How do we deal with fragmented or incomplete patient data?

Dealing with fragmented and incomplete data is a core technical challenge of AI in healthcare. Strategies include:

1. Data Standardization. Promoting and adopting universal data standards (like HL7 FHIR) to enable easier data exchange and aggregation.

2. Data Integration Platforms. Utilizing technologies like APIs and cloud platforms to connect disparate systems and create more unified data views.

3. Data Cleaning and Preprocessing. Implementing rigorous processes to clean data, handle inconsistencies, and impute missing values where appropriate before feeding it to AI models.

4. Advanced AI Techniques. Employing AI/ML methods designed to handle missing or noisy data or techniques like natural language processing to extract structured information from unstructured text.

5. Collaborative Efforts. Encouraging responsible data-sharing agreements between institutions to create larger, more comprehensive datasets.
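For the cleaning and imputation step, one simple and common approach is to fill a missing numeric value with the median and keep a flag recording that it was imputed. The field name and records below are hypothetical, and whether imputation is appropriate at all depends on why the data is missing.

```python
import statistics

def impute_median(rows, field):
    """Fill missing values with the median and flag which rows were imputed."""
    observed = [r[field] for r in rows if r.get(field) is not None]
    if not observed:
        raise ValueError(f"no observed values for {field!r}")
    median = statistics.median(observed)
    result = []
    for r in rows:
        missing = r.get(field) is None
        filled = dict(r)  # copy so the input records are left untouched
        filled[field] = median if missing else r[field]
        filled[f"{field}_imputed"] = missing
        result.append(filled)
    return result

# Hypothetical records; the second one is missing a blood pressure reading.
records = [
    {"id": 1, "systolic_bp": 120},
    {"id": 2, "systolic_bp": None},
    {"id": 3, "systolic_bp": 140},
    {"id": 4, "systolic_bp": 130},
]
cleaned = impute_median(records, "systolic_bp")
print(cleaned[1])
```

Keeping the `systolic_bp_imputed` flag lets a downstream model, or a reviewer, tell measured values apart from filled-in ones.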