Artificial Intelligence (AI) is poised to reshape the healthcare landscape, offering transformative benefits. The technology enhances diagnostic accuracy, enabling earlier disease detection. It personalizes treatment plans by analyzing complex patient data. AI streamlines administrative workflows such as scheduling and billing, freeing up staff time, and accelerates drug discovery by analyzing vast datasets. Furthermore, AI optimizes hospital operations, improves resource allocation, and helps tackle global health challenges such as expanding access to care. Once considered futuristic, AI is becoming an integrated part of modern healthcare delivery.

However, this potential comes with significant risks. AI deployment raises patient safety concerns, as errors can have severe consequences. The need for vast amounts of sensitive patient data creates critical privacy and security challenges. AI algorithms can inherit and amplify societal biases from historical data, leading to health inequities and discrimination. The “black box” problem (opaque decision-making processes) erodes trust among clinicians and patients. Accountability for AI errors is complex, with blurred lines of responsibility. Moreover, the rapid pace of AI evolution, especially in generative AI (GenAI), often outpaces regulatory and ethical guidelines.

Navigating this complex terrain requires a structured, systematic approach: AI governance in healthcare. Establishing comprehensive governance frameworks ensures AI innovation proceeds responsibly, ethically, and effectively. Strong AI governance in healthcare builds trust and safeguards patient well-being, providing key guardrails for medical innovation.

The Fundamentals of AI Governance

Before focusing on healthcare, it helps to define the broader concept. What is AI governance? It is a system of policies, procedures, standards, ethical guidelines, accountability structures, and oversight mechanisms that organizations use to direct the responsible design, development, deployment, and use of AI systems. That involves assigning accountability, defining decision rights, managing risks, setting ethical boundaries, and ensuring AI investments align with organizational values and societal expectations.

The purpose goes beyond rules. AI governance ensures that AI operates with transparency, fairness, accountability, security, and respect for human rights and ethics. It aims to foster a culture of sustained ethical practice and build enduring trust among developers, users, regulators, and the public. This trust is vital for AI adoption.

Leading organizations offer consistent views. Gartner defines it as “the process of assigning and assuring organizational accountability, decision rights, risks, policies and investment decisions for applying AI.” The OECD promotes “responsible stewardship of trustworthy AI” through principles like transparency, fairness, and accountability. Meanwhile, NIST’s AI Risk Management Framework (AI RMF) offers a risk-based approach to maximize benefits while minimizing negative consequences.

These definitions reveal AI governance is about aligning technology with human values and societal norms. The emphasis on ethics, human rights, fairness, and societal benefit alongside technical needs underscores this. The goal is to direct AI toward beneficial, human-centric outcomes, embodying “Responsible AI.”

AI governance must be dynamic. As AI evolves, especially with GenAI creating novel content, frameworks must adapt. Generative AI challenges like potential inaccuracies (“hallucinations”) require updated governance strategies. The OECD updated its AI definition and principles to stay relevant. Effective AI governance requires ongoing evaluation and adaptation.

AI Governance in Healthcare as a Critical Imperative

Applying these general principles to medicine yields AI governance in healthcare. This specialized field involves tailoring and implementing governance frameworks for AI tools in clinical practice, hospital operations, medical research, and public health. It demands careful consideration of sensitive protected health information (PHI), the life-altering consequences of AI-influenced clinical decisions, and complex healthcare rules like HIPAA and GDPR.

Robust AI governance in healthcare is paramount. Unlike in many other sectors, errors or biases in medical AI can have immediate, severe impacts on patient safety and well-being. Governance here requires exceptional rigor, diligence, and ethical scrutiny. The core objective is ensuring AI supports clinical goals, enhances patient care, and strictly adheres to safety and ethical standards. That means managing implementation effectively and mitigating risks proactively. Effective governance also requires knowing which AI tools are in use, how they are used, and on whom.

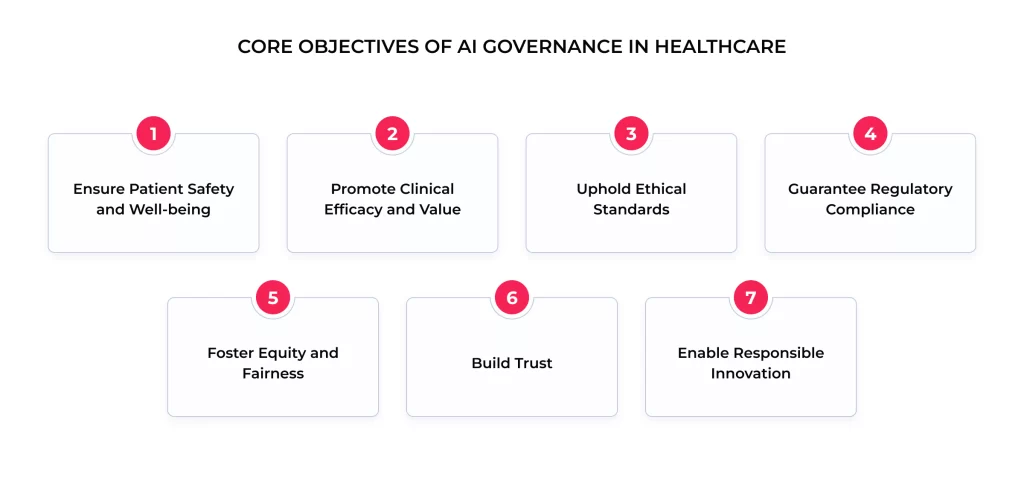

Key Objectives for Healthcare AI Governance

Effective AI governance in healthcare pursues critical objectives, forming a framework for policies and oversight specific to medicine:

- Ensure Patient Safety and Well-being. This is the top priority. Minimize harm risk from AI decisions or failures through rigorous testing, validation, and monitoring.

- Promote Clinical Efficacy and Value. Ensure AI tools are effective and reliable and improve outcomes, diagnostics, or efficiency. Value demonstration is crucial.

- Uphold Ethical Standards. Align AI with medical ethics (beneficence, non-maleficence, autonomy, justice), respect patient rights, and reflect societal values. The ethical review must be integral.

- Guarantee Regulatory Compliance. Ensure strict adherence to all healthcare laws (HIPAA, GDPR) and regulatory guidelines (e.g., FDA).

- Foster Equity and Fairness. Actively address and mitigate algorithmic bias to prevent discrimination and ensure AI benefits all patient populations equitably.

- Build Trust. Cultivate confidence among clinicians, patients, administrators, and regulators through transparency, clear communication, reliability, and accountability.

- Enable Responsible Innovation. Create clear pathways for adopting beneficial AI by managing risks effectively without unduly stifling progress.

Navigating these goals reveals the tension between rapid innovation and the need for safety, ethics, and compliance. Leaders aim to innovate without compromising safety, and mature healthcare AI governance frameworks strike this balance. They provide systematic ways to identify risks, evaluate benefits, and implement safeguards. The challenge is creating processes that are rigorous yet flexible, avoiding bureaucratic hurdles that block valuable technologies. Effective governance creates a safe space for responsible innovation.

Pillars of Responsible AI in Healthcare Settings

Robust AI governance in healthcare rests on several foundational pillars, providing structure to address the challenges of responsibly deploying AI.

Ensuring Data Privacy and Security

Protecting the confidentiality, integrity, and availability of PHI is paramount. AI often requires vast datasets, making stringent data privacy and security non-negotiable. Data breaches erode trust and harm patients.

AI governance in healthcare must embed compliance with data protection regulations (HIPAA, GDPR) from the start (“privacy by design”). Key practices include:

- Secure data collection, storage, and transmission

- Robust role-based access controls

- Techniques like anonymization or pseudonymization where appropriate

Frameworks like Gartner’s AI TRiSM emphasize Privacy, scrutinizing model architecture, training data, and retention policies. Principles like IQVIA’s “Respect” and Novartis’s “Protect data” highlight the necessary organizational commitment.
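As one illustration of the pseudonymization practice listed above, the sketch below replaces a patient identifier with a keyed hash and drops direct identifiers from a record. It is a minimal example under stated assumptions, not a compliance recipe: the field names (`patient_id`, `name`, `address`) and the key handling are hypothetical. Pseudonymized data can still be re-linked by whoever holds the key, so it is not the same as anonymization.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for patient_id using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def pseudonymize_record(record: dict, secret_key: bytes,
                        id_field: str = "patient_id",
                        drop_fields: tuple = ("name", "address")) -> dict:
    """Copy a record, drop direct identifiers, and replace the ID with a pseudonym.

    The field names are hypothetical; a real schema would define its own list
    of direct identifiers.
    """
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned[id_field] = pseudonymize(str(record[id_field]), secret_key)
    return cleaned


if __name__ == "__main__":
    # Hypothetical key for illustration; in practice it would live in a secrets manager.
    key = b"example-key"
    row = {"patient_id": "MRN-001", "name": "Jane Doe", "address": "1 Main St", "hba1c": 6.9}
    print(pseudonymize_record(row, key))
```

Using a keyed hash rather than a plain hash means that someone without the key cannot rebuild the mapping by hashing guessed identifiers.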

Achieving Algorithm Transparency and Explainability

“Black box” AI models, with opaque decision processes, challenge the industry. Clinicians struggle to trust recommendations they do not understand, hindering integration into clinical reasoning. Patients also have a right to understand decisions affecting their health.

Transparency and explainability are thus critical pillars of AI governance in healthcare. Transparency means clarity about how an AI system was designed, developed, trained, and deployed. Explainability means being able to articulate the reasoning behind a specific AI output. Governance must promote both. That includes using interpretable models when possible, employing interpretability techniques to explain complex models, thoroughly documenting designs and limitations, and communicating AI’s role clearly.

However, achieving transparency and explainability for highly complex models remains difficult. Intricate model logic can defy simple explanations, creating a gap between the ideal and reality. Effective governance needs pragmatic strategies for managing less interpretable models. That involves rigorous validation before deployment, constant monitoring post-deployment, defining acceptable opacity levels based on risk, and ensuring meaningful human oversight.

Promoting Ethical AI and Mitigating Bias

Ethical considerations are core to healthcare. AI must align with ethical principles: beneficence, non-maleficence, autonomy, and justice. AI governance in healthcare must include mechanisms to proactively address ethical implications at every stage.

Algorithmic bias is a major ethical challenge. AI models trained on biased historical data can inherit and amplify biases related to race, gender, socioeconomic status, and other characteristics. That can lead to health disparities, where AI performs poorly or recommends suboptimal treatments for specific groups.

Mitigating bias is a critical governance function requiring continuous effort. Below are some critical strategies for bias mitigation in healthcare AI:

- Diverse and Representative Data. Ensure training data reflects the diversity of the target patient population.

- Bias Audits. Implement regular testing to assess model performance across demographic subgroups.

- Fairness Metrics. Define and use specific metrics to measure fairness, recognizing context matters.

- Algorithmic Adjustments. Explore technical methods to reduce bias, considering trade-offs.

- Transparency in Limitations. Document and communicate known biases or limitations.

- Human Oversight. Maintain human involvement, allowing clinicians to override potentially biased AI recommendations.

These strategies are grounded in ethical guidelines emphasizing fairness.
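The bias audit and fairness metric items above can be sketched in a few lines. The example below is a minimal illustration, assuming binary labels and predictions (0 or 1) and a single group attribute per patient. It reports each group's selection rate and true-positive rate, then the largest gap between groups. Those gaps are commonly called the demographic parity difference and the equal opportunity difference. The function names and data are hypothetical, and which metric is appropriate depends on the clinical context.

```python
from collections import defaultdict


def subgroup_rates(y_true, y_pred, groups):
    """Per-group selection rate and true-positive rate for binary labels (0/1)."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "true_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += p
        c["pos"] += t
        c["true_pos"] += 1 if (t == 1 and p == 1) else 0
    report = {}
    for g, c in counts.items():
        report[g] = {
            # Share of the group that the model flags as positive.
            "selection_rate": c["pred_pos"] / c["n"],
            # Share of truly positive patients the model catches (None if no positives).
            "tpr": c["true_pos"] / c["pos"] if c["pos"] else None,
        }
    return report


def max_gap(report, metric):
    """Largest difference in a metric between any two groups (undefined values skipped)."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


if __name__ == "__main__":
    # Made-up data for illustration only.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    report = subgroup_rates(y_true, y_pred, groups)
    print(report)
    print("TPR gap:", max_gap(report, "tpr"))
```

A governance policy might set a threshold on such gaps and require review when it is exceeded. The threshold itself is a judgment call for the oversight body, not something the code can decide.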

Establishing Accountability and Liability

Who is responsible when AI contributes to an error or harm? Clear accountability is essential for proper AI governance in healthcare. It enables redress, learning from errors, and building trust.

Assigning accountability is complex. Responsibility could lie with developers, the deploying organization, the clinician, or a combination. AI itself lacks legal personhood. Governance frameworks must define roles, responsibilities, and escalation procedures. Robust audit trails that log system operations and user interactions are crucial for retrospective analysis. Surveys show that 65% of organizations with AI governance have established accountability structures.

This challenge highlights potential gaps where existing legal frameworks (like malpractice law) may not adequately cover AI nuances. Distributed decision-making, model opacity, and errors originating in data or design complicate liability. Professional bodies like the AMA advocate for clarity on physician liability and scrutinize payer AI use. This suggests that while internal governance defines accountability structures, broader legal evolution might be needed for comprehensive clarity, especially regarding developer-deployer-user responsibilities.
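The audit trails mentioned above can be made tamper-evident by chaining entries with hashes. The sketch below is one possible in-memory design, not a production logging system. The class name, field names, and example actors are all hypothetical. Each entry stores the hash of the previous entry, so editing an earlier record breaks verification.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only, hash-chained log of AI system events (in-memory sketch)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself; details must be JSON-serializable.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, actor, action, model_id, details=None):
        """Append one event, linking it to the previous entry's hash."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "model_id": model_id,
            "details": details or {},
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("model:risk-score-v2", "prediction", "risk-score-v2", {"score": 0.91})
    trail.record("clinician:42", "override", "risk-score-v2", {"reason": "clinical judgment"})
    print(trail.verify())
```

Recording both the model output and the clinician's response in the same trail supports the retrospective analysis described above, because it shows who decided what and in which order.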

Mastering Data Through AI Data Governance in Healthcare

Within AI governance, AI data governance is a critical sub-discipline focusing on policies, processes, standards, and controls for data throughout the AI lifecycle. In healthcare, AI data governance is vital due to the sensitivity, privacy requirements, and critical nature of patient health information.

Its primary role is ensuring data quality, integrity, security, privacy, and ethical handling for AI. High-quality data is the foundation of trustworthy AI. Poor AI data governance (leading to bad data quality, bias, and privacy violations) results in flawed models, discrimination, and patient harm. Data issues often impede AI scaling. Robust AI data governance is thus fundamental to successful AI governance in healthcare.

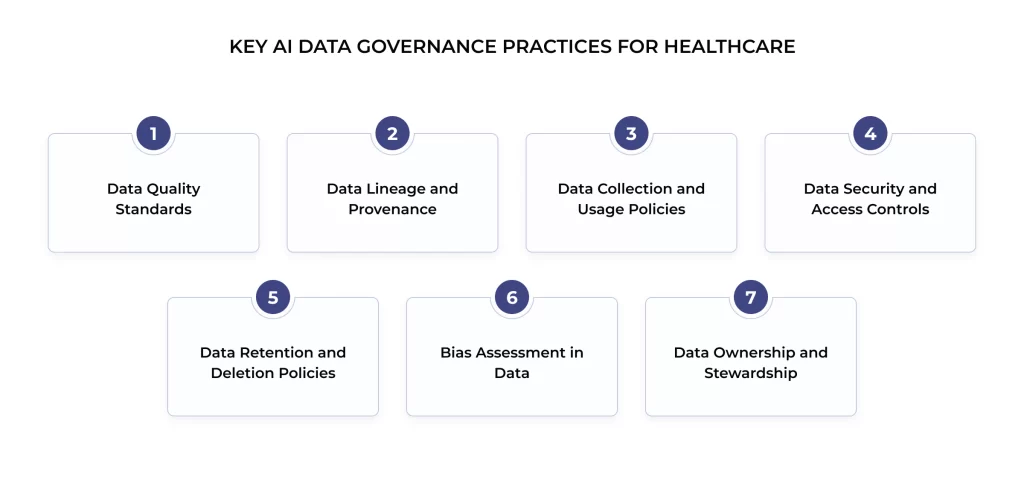

Essential Policies and Practices

Effective AI data governance requires comprehensive policies and practices covering the entire data journey for AI:

- Data Quality Standards. Define accuracy, completeness, consistency, timeliness, and relevance standards for AI use cases, including data cleansing and validation processes.

- Data Lineage and Provenance. Track data origins (provenance) and document transformations and usage history (lineage) for traceability, debugging, and auditing.

- Data Collection and Usage Policies. Establish strict guidelines for ethical data sourcing, patient consent, and permissible data use in AI training, testing, and deployment. Address secondary data use.

- Data Security and Access Controls. Implement state-of-the-art security (encryption, access controls) to protect data from unauthorized access or misuse.

- Data Retention and Deletion Policies. Define how long AI-related data is kept and establish secure deletion procedures.

- Bias Assessment in Data. Integrate procedures to assess datasets for potential biases before use and document findings/mitigation.

- Data Ownership and Stewardship. Clearly define data ownership and stewardship roles, clarifying accountability for managing specific datasets and sharing agreements.

These practices align with frameworks like Gartner’s TRiSM, IQVIA’s principles, and Novartis’ guidelines, addressing widely recognized data challenges. Effective AI data governance requires deep collaboration among diverse stakeholders: data scientists, clinicians, ethicists, legal/compliance teams, and leadership. This multidisciplinary approach is essential to integrate technical needs, clinical knowledge, ethical considerations, and legal constraints.
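To make the data quality standards item concrete, the sketch below shows simple automated checks for completeness, plausible value ranges, and timeliness on a single record. Everything specific in it is an assumption for illustration: the field names, the `HBA1C` test code, and the plausible range are hypothetical and would be replaced by standards agreed with clinical and data teams.

```python
from datetime import date

# Hypothetical rules for an illustrative lab-results dataset.
REQUIRED_FIELDS = ("patient_id", "test_code", "value", "collected_on")
VALUE_RANGES = {"HBA1C": (2.0, 20.0)}  # assumed plausible range, in percent


def validate_record(record, today=None):
    """Return a list of data quality issues found in one record (empty if none)."""
    today = today or date.today()
    issues = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            issues.append(f"missing:{field}")
    # Accuracy: numeric values must fall inside the configured plausible range.
    code, value = record.get("test_code"), record.get("value")
    if code in VALUE_RANGES and isinstance(value, (int, float)):
        low, high = VALUE_RANGES[code]
        if not low <= value <= high:
            issues.append(f"out_of_range:{code}")
    # Timeliness and consistency: a sample cannot be collected in the future.
    collected = record.get("collected_on")
    if isinstance(collected, date) and collected > today:
        issues.append("future_date:collected_on")
    return issues


def completeness(records, field):
    """Share of records with a non-empty value for field."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)
```

Checks like these can run before data reaches model training, with the resulting issue lists stored alongside lineage records for later audits.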

Operationalizing Governance: The Role of AI Governance Software

As healthcare AI adoption grows, managing governance complexity manually becomes impractical. AI governance software refers to specialized tools and platforms designed to help organizations implement, manage, monitor, automate, and enforce their AI governance frameworks. These solutions are critical for operationalizing governance, especially as the number and complexity of AI models increase.

The need arises from difficulties in applying governance consistently across diverse AI applications. Manual risk assessment, monitoring, bias detection, and reporting processes are inefficient, error-prone, and hard to scale. AI governance software streamlines workflows, provides centralized visibility, and automates tasks, freeing experts for strategic activities. Frameworks like Gartner’s AI TRiSM recognize tooling’s role, and the need for systematic approaches is clear.

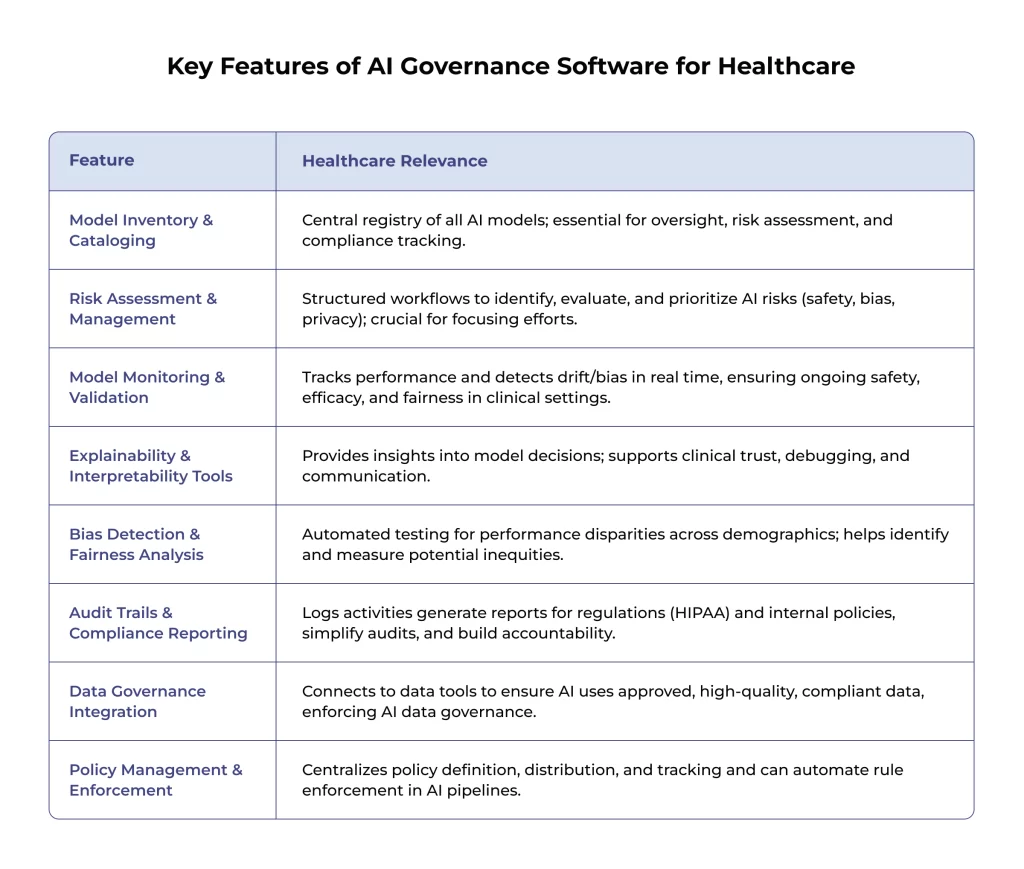

Key Capabilities for Healthcare Needs

AI governance software offers features relevant to healthcare’s specific needs and risks, helping translate policy into practice.

Collectively, these capabilities address the practical challenges of AI governance in healthcare, providing mechanisms for transparency, accountability, fairness, and risk management. They align with ISO/IEC 42001, the standard for AI management systems.

Benefits of Using AI Governance Software

Adopting dedicated AI governance software offers several advantages. It increases operational efficiency by automating and centralizing tasks, allowing teams to manage more models. It also improves risk management through systematic identification, assessment, and mitigation tools.

Enhanced transparency and auditability build trust and simplify compliance demonstration. Lastly, this software helps organizations mature from ad-hoc to systematic, reliable governance frameworks, fostering confident AI adoption. Users report benefits like more successful AI initiatives and better patient experiences.

Navigating the Challenges of Healthcare AI Governance

Despite the need, implementing effective AI governance in healthcare faces many challenges.

- Complexity is a major hurdle. Understanding diverse AI models, their failure modes, and interactions within clinical workflows requires specialized knowledge. That is compounded by navigating ethical dilemmas, evolving laws, and strict regulations.

- A widespread lack of expertise combining AI, clinical practice, ethics, law, and governance skills hinders proper evaluation, policy design, and oversight.

- Persistent data issues (quality, completeness, availability, representativeness) undermine AI performance and trust. Ensuring diverse data and mitigating bias are ongoing challenges. Over half of organizations feel unprepared data-wise for GenAI.

- The rapid pace of innovation means governance frameworks struggle to keep up. Policies for older AI may not cover newer models like GenAI, requiring adaptation.

- Implementing AI governance in healthcare demands significant resource commitments (time, budget, personnel), which is a barrier for smaller facilities.

- Integration challenges arise when embedding AI and governance into clinical workflows and IT infrastructures. Poor integration hinders adoption and safety.

- Ambiguity around accountability and liability remains a concern, complicating responsibility frameworks.

Effective change management is crucial but often underestimated. Overcoming resistance, ensuring training, and fostering a collaborative culture are essential. Many organizations develop governance reactively (“building the plane as they fly it”), with only 16% reporting comprehensive, systemwide policies.

Strategies for Success

Overcoming these hurdles requires proactive, strategic commitment. Key strategies include:

- Leadership Commitment & Executive Sponsorship. Strong, visible support from leadership is fundamental for resources, direction, and fostering a responsible culture.

- Cross-Functional Governance Committees. Establish bodies with diverse expertise (clinical, technical, legal, ethics, admin) for comprehensive oversight.

- Phased and Focused Implementation. Start with clear objectives for specific use cases, prioritize high-risk areas, and implement governance iteratively.

- Investment in Training and AI Literacy. Build AI understanding across the workforce through tailored training for different roles.

- Adopting Standard Frameworks. Leverage established guidelines (NIST AI RMF, OECD Principles, WHO Guidance) to accelerate internal policy development.

- Continuous Monitoring and Adaptation. AI governance requires ongoing monitoring of model performance, regular audits, and periodic policy updates.

- Rigorous Vendor Management. Implement thorough vetting of third-party AI solutions and vendors, assessing tech, data practices, and governance. Monitor ongoing compliance.

- Focus on Communication and Transparency. Engage all stakeholders (clinicians, staff, patients) throughout the process to build trust, gather feedback, and facilitate adoption.

Employing these strategies helps navigate complexities and build effective, sustainable frameworks for AI governance in healthcare.
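The continuous monitoring strategy listed above can be illustrated with a small rolling-window check. This is a minimal sketch that assumes ground-truth outcomes eventually become available for each prediction. The class name, window size, and the 0.05 tolerated drop are hypothetical defaults, not recommended values.

```python
from collections import deque


class PerformanceMonitor:
    """Track rolling accuracy of a deployed model and flag drops below a baseline."""

    def __init__(self, baseline_accuracy, max_drop=0.05, window=200, min_samples=50):
        self.baseline = baseline_accuracy  # accuracy measured at validation time
        self.max_drop = max_drop           # tolerated drop before a review is triggered
        self.min_samples = min_samples     # avoid alerts on too little evidence
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = incorrect

    def observe(self, prediction, actual):
        """Record whether one prediction matched the confirmed outcome."""
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self):
        """Accuracy over the most recent window, or None if nothing observed yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True when recent accuracy falls more than max_drop below the baseline."""
        if len(self.outcomes) < self.min_samples:
            return False
        return self.rolling_accuracy() < self.baseline - self.max_drop
```

In practice a flag like `needs_review()` would feed the governance committee's escalation process rather than change model behavior automatically. Accuracy is also only one possible signal, and subgroup metrics or calibration may matter more for a given tool.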

Final Thoughts

AI offers immense potential for healthcare, enhancing diagnostics, personalizing treatments, improving efficiency, and expanding access. Realizing this potential safely, ethically, and equitably hinges on effective AI governance in healthcare. Without it, risks of harm, data breaches, bias, and eroded trust threaten progress.

The core components (ethical frameworks, meticulous AI data governance, transparency, accountability, and bias mitigation) are essential building blocks for trustworthy AI systems. They foster the trust needed for successful AI integration and ensure innovation serves human well-being.

Achieving effective AI governance in healthcare requires a proactive, collaborative, adaptive approach involving all stakeholders: developers, organizations, clinicians, staff, patients, and policymakers. It demands sustained investment in resources, training, and continuous improvement, as governance must evolve with technology.

By prioritizing strong AI governance in healthcare, we can shape a future where AI acts as a powerful force for good. This would enhance care quality, improve outcomes, support professionals, and strengthen healthcare system integrity. Tools like AI governance software can help operationalize these frameworks and manage complexity. The path forward requires diligence, collaboration, and a commitment to patient safety and ethics in medical AI innovation.

FAQ

Who is responsible when an AI system makes a mistake in healthcare?

Responsibility is often complex and shared among developers, the deploying organization, and clinicians. Robust AI governance aims to establish clear roles, responsibilities, oversight, and audit trails to help determine contextual accountability. Legal frameworks are still evolving.

How do we ensure AI is used ethically in clinical settings?

Ethical use requires:

- Embedding ethics in governance

- Establishing clear principles (patient well-being, fairness, transparency)

- Creating ethics review processes

- Providing comprehensive staff training on ethical implications

- Continuous monitoring for alignment with ethical standards

How do we manage bias in healthcare AI models?

Managing bias is critical and ongoing. Strategies include:

1. Using diverse training data

2. Conducting regular bias audits across demographics

3. Using appropriate fairness metrics

4. Applying technical mitigation techniques carefully

5. Ensuring transparency about limitations

6. Maintaining meaningful human oversight

Effective AI data governance is key.

Can we trust black-box AI models in clinical decision-making?

Trusting opaque models requires strong safeguards:

- Demanding maximum achievable transparency/explainability

- Rigorous independent validation before deployment

- Continuous post-deployment monitoring

- Ensuring meaningful human oversight with the ability to intervene or override AI recommendations, especially for critical decisions

Trust must be earned through verification.

How do we make AI decisions explainable to doctors and patients?

Explainability involves technical methods and tailored communication. Prioritize interpretable models when feasible. Use techniques like LIME or SHAP for complex models. Develop clear documentation. Some AI governance software offers explanation tools. Explanations must suit the audience: technical for clinicians, and clear and impact-focused for patients.
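To show what a per-decision explanation can look like, the sketch below handles the simplest case, an interpretable linear risk score. It is not LIME or SHAP. It is a simpler stand-in that works because a linear score's difference from a baseline patient splits exactly into one term per feature. The weights, feature names, and baseline values are made up for illustration and have no clinical meaning.

```python
def linear_contributions(weights, baseline, patient):
    """Per-feature contribution to a linear score, relative to a baseline patient.

    For score = bias + sum(w_i * x_i), the gap between a patient's score and
    the baseline score equals the sum of w_i * (x_i - baseline_i).
    """
    return {name: weights[name] * (patient[name] - baseline[name]) for name in weights}


def explain(weights, baseline, patient, top_k=3):
    """Return the top_k features ranked by absolute contribution."""
    contributions = linear_contributions(weights, baseline, patient)
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    # Hypothetical model and patients, for illustration only.
    weights = {"age": 0.02, "systolic_bp": 0.01, "hba1c": 0.3}
    baseline = {"age": 50, "systolic_bp": 120, "hba1c": 5.5}
    patient = {"age": 70, "systolic_bp": 150, "hba1c": 8.5}
    for feature, contribution in explain(weights, baseline, patient):
        print(f"{feature}: {contribution:+.2f}")
```

The same ranked list could be shown as numbers to a clinician or turned into a plain-language summary for a patient, which is the audience tailoring described above.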

What is the role of interpretability in AI governance?

Interpretability (understanding why an AI decides something) is crucial for AI governance in healthcare. It supports transparency, helps identify bias/flaws, aids debugging, builds clinician trust, and assists accountability. Governance frameworks often link required interpretability levels to application risk.
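One way to express the link between application risk and required interpretability is a small policy table. The sketch below is purely illustrative. The tier names, the classification rule, and the requirements are hypothetical, and a real policy would be defined by the organization's governance committee.

```python
from dataclasses import dataclass

# Hypothetical tiers and requirements, for illustration only.
REQUIREMENTS = {
    "low": {"explanation": "model documentation", "human_review": False},
    "medium": {"explanation": "per-decision feature attributions", "human_review": True},
    "high": {"explanation": "interpretable model or validated explanations", "human_review": True},
}


@dataclass
class UseCase:
    name: str
    influences_clinical_decision: bool
    patient_facing: bool


def risk_tier(use_case: UseCase) -> str:
    """Assign a tier with a deliberately simple, assumed rule."""
    if use_case.influences_clinical_decision:
        return "high"
    if use_case.patient_facing:
        return "medium"
    return "low"


def required_controls(use_case: UseCase) -> dict:
    """Look up the interpretability and oversight requirements for a use case."""
    return REQUIREMENTS[risk_tier(use_case)]


if __name__ == "__main__":
    alert = UseCase("deterioration alert", influences_clinical_decision=True, patient_facing=False)
    print(risk_tier(alert), required_controls(alert))
```

Keeping the policy as data makes it easy to review and update as the framework evolves, without touching the code that enforces it.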

What kind of data governance policies are needed for AI training?

Robust AI data governance for training needs policies covering:

- Ethical data sourcing and consent

- Rigorous data quality and representativeness assessment

- Strong privacy measures (e.g., de-identification)

- Robust data security

- Clear rules on permissible data usage

- Integrated processes for bias assessment within training data

Who owns the data used to train AI models in healthcare?

Data ownership is complex. Typically, the healthcare provider/patient owns the PHI. Contracts with AI vendors must meticulously define data usage rights, limitations, IP rights (model vs. data), and security/privacy responsibilities. AI governance in healthcare ensures careful management of these agreements.

How do we ensure data quality and integrity in AI systems?

Ensuring data quality requires ongoing AI data governance:

- Defining clear quality standards

- Implementing automated validation checks

- Continuous monitoring for anomalies

- Tracking data lineage

- Performing regular quality audits

– Establishing processes for error correction